Major update to Monolith AI software recommends which validation tests to run next

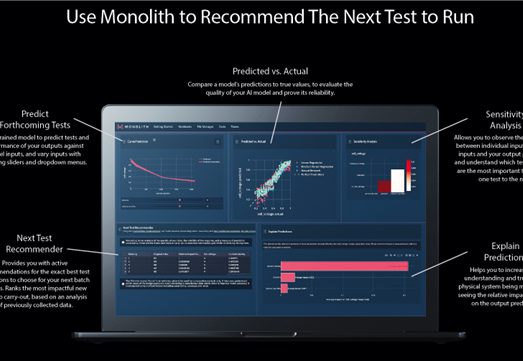

Monolith, an artificial intelligence (AI) software provider to the world’s leading engineering teams, has released a major product update called Next Test Recommender (NTR). The new technology, now available in beta in Monolith’s no-code AI platform built for engineering domain experts, recommends which validation tests to run during the development of hard-to-model, nonlinear products in automotive, aerospace and industrial applications.

As the physics of complex products in these industries becomes increasingly intractable, engineers face a dilemma: either conduct excessive tests to cover every possible operating condition, or run too few tests and risk missing critical performance parameters. NTR, powered by the company’s proprietary active learning technology, aims to optimise this trade-off by giving test engineers active recommendations, in ranked order, of the most impactful tests to carry out in their next batch, maximising coverage while reducing time and cost.
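
NTR’s active learning method is proprietary and is not described in this article. As a rough illustration of what ranking planned tests “to maximise coverage” can mean, the sketch below uses a swapped-in, model-free heuristic: greedy farthest-point (maximin) ordering, which repeatedly picks the planned test whose operating conditions lie farthest from everything already tested or chosen. The function name `rank_tests_by_coverage`, and the assumption that each test is a point of normalised operating conditions, are illustrative only; a genuine active learning system would typically also use a model’s prediction uncertainty.

```python
import math
from typing import Sequence

Point = tuple[float, ...]


def rank_tests_by_coverage(
    candidates: Sequence[Point], done: Sequence[Point] = ()
) -> list[int]:
    """Order planned tests so each pick lies in the least-covered region (hypothetical helper).

    candidates: planned tests as points of normalised operating conditions.
    done: tests already run; the ranking tries to move away from them first.
    """
    remaining = list(range(len(candidates)))
    # Distance from each candidate to the nearest point already covered.
    nearest = [min((math.dist(c, d) for d in done), default=math.inf) for c in candidates]
    order: list[int] = []
    while remaining:
        # Greedy maximin step: take the candidate farthest from all covered points.
        best = max(remaining, key=lambda i: nearest[i])
        order.append(best)
        remaining.remove(best)
        # The chosen test now counts as covered, so shrink the other distances.
        for i in remaining:
            nearest[i] = min(nearest[i], math.dist(candidates[i], candidates[best]))
    return order


if __name__ == "__main__":
    # Hypothetical plan: (normalised ambient temperature, normalised vehicle speed).
    plan = [(0.0, 0.0), (0.1, 0.0), (1.0, 1.0), (0.5, 0.5), (1.0, 0.0)]
    print(rank_tests_by_coverage(plan))  # [0, 2, 4, 3, 1]
```

In this toy plan the ordering jumps to the opposite corner of the operating space before filling in points close to ones already chosen, which is the intuition behind running an “end of plan” test early.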

“Throughout our development process, we worked alongside our customers to understand how they would use an AI recommender system as part of their test workflow. We wanted to understand why they had not yet adopted such tools despite the well-known potential of AI to more quickly explore high-dimensional design spaces,” said Dr. Richard Ahlfeld, CEO and Founder of Monolith. “We found that existing tools didn’t fit their safety needs, and did not allow test engineers to incorporate their domain expertise into the test plan or influence the AI recommender.”

“Our R&D team has been working for months on this robust active learning technology that powers Next Test Recommender and we’re pleased with early results and feedback, with even better results expected as the technology matures,” Dr. Ahlfeld added.

NTR works for any complex system whose design space engineers need to explore safely, such as aero map analysis for race cars, or the flight safety envelope of aircraft, where engineers need to find where gusts or eigenfrequencies cause problems. Another growing area is powertrain development, such as calibrating battery or fuel cell cooling systems. In one such case, an engineer configuring a fan to provide optimal cooling across all driving conditions had a test plan of 129 tests for this highly complex, intractable application. When this test plan was entered into NTR, it returned a ranked list of which tests should be carried out first. As shown in Fig. 1, NTR recommended that the last test, number 129, should be among the first five to run, and that 60 tests are sufficient to characterise the full performance of the fan: a 53% reduction in testing.

Fig. 1. The Monolith Next Test Recommender system recommended that the last test, number 129, should be among the first five to run, and that 60 tests are sufficient to characterise the full performance of the fan, a 53% reduction in testing.

Whereas available open-source AI methods do not allow engineers to influence the test plan, a unique aspect of NTR is that it supports human-in-the-loop inspection of the selected experiments. This gives domain experts oversight of the system, letting them combine their expertise and domain knowledge with the power of machine learning without needing any knowledge of AI or coding.
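
NTR’s review interface is not documented in this article. Purely as a hypothetical sketch of what human-in-the-loop oversight of a ranked test list could look like, the function below (its name and parameters are assumptions) lets an engineer veto recommended tests, for example ones outside a safe operating envelope, and pin tests they want run first, while otherwise preserving the recommender’s order.

```python
from typing import Iterable


def apply_expert_review(
    ranked: list[str],
    pinned: Iterable[str] = (),
    vetoed: Iterable[str] = (),
) -> list[str]:
    """Merge an engineer's decisions into a recommender's ranked test list (hypothetical helper).

    ranked: test IDs in the order the recommender suggests.
    pinned: tests the engineer wants run first, in the given order; they may be new tests.
    vetoed: tests the engineer rejects; a veto overrides a pin.
    """
    vetoed_set = set(vetoed)
    # dict.fromkeys drops duplicate pins while keeping the engineer's order.
    pinned_list = [t for t in dict.fromkeys(pinned) if t not in vetoed_set]
    pinned_set = set(pinned_list)
    # Every test the engineer did not touch keeps the recommender's relative order.
    rest = [t for t in ranked if t not in vetoed_set and t not in pinned_set]
    return pinned_list + rest


if __name__ == "__main__":
    recommended = ["T129", "T012", "T057", "T003"]
    print(apply_expert_review(recommended, pinned=["T003"], vetoed=["T057"]))
    # ['T003', 'T129', 'T012']
```

Letting a veto win over a pin is one possible design choice: it errs on the side of the engineer’s safety judgement when the two conflict.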

A recent Forrester Consulting study, commissioned by Monolith, found that 71% of engineering leaders need to find ways to speed up product development to stay competitive, and that the majority (67%) also feel pressure to adopt AI. Notably, leaders who have adopted AI are more likely to achieve increased revenue, profitability and competitiveness for their employers.

By leveraging AI and machine learning in the verification and validation process of product development, especially for highly complex products with intractable physics, engineers can extract valuable insights, optimise designs, and identify crucial performance parameters accurately. The result is enhanced operational efficiency and streamlined testing procedures, ultimately speeding time to market and strengthening competitiveness.

No-Code Software for Engineering Domain Experts

Monolith is a no-code AI software platform that lets domain experts apply machine learning to their existing, valuable test datasets to speed up product development. Self-learning models analyse and learn from test data to understand the impact of test conditions and predict a new test’s outcome ahead of time. Active next-test recommendations will further enable engineering teams to reduce costly, time-intensive prototype testing programmes and develop higher-quality products in half the time.
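
The article does not say which models Monolith uses. As a stand-in to illustrate the idea of predicting a new test’s outcome from past test data, the sketch below uses a simple k-nearest-neighbour regressor with inverse-distance weighting; the function name, the choice of `k` and the example data are all hypothetical.

```python
import math
from typing import Sequence


def predict_outcome(
    conditions: Sequence[Sequence[float]],
    outcomes: Sequence[float],
    query: Sequence[float],
    k: int = 3,
) -> float:
    """Estimate a new test's outcome from the k most similar past tests (hypothetical helper)."""
    if not conditions or len(conditions) != len(outcomes):
        raise ValueError("need one outcome per past test, and at least one test")
    if k < 1:
        raise ValueError("k must be at least 1")
    # Pair each past test's distance to the query with its measured outcome, nearest first.
    nearest = sorted(zip((math.dist(c, query) for c in conditions), outcomes))[:k]
    # If these exact conditions were already tested, return the measurement itself.
    for distance, outcome in nearest:
        if distance == 0.0:
            return outcome
    # Otherwise weight closer tests more heavily (inverse-distance weighting).
    weights = [1.0 / distance for distance, _ in nearest]
    return sum(w * y for w, (_, y) in zip(weights, nearest)) / sum(weights)


if __name__ == "__main__":
    # Hypothetical past tests: (fan duty fraction,) -> measured coolant temperature rise in K.
    past_conditions = [(0.2,), (0.5,), (0.8,)]
    past_outcomes = [30.0, 20.0, 14.0]
    print(predict_outcome(past_conditions, past_outcomes, (0.35,), k=2))
```

A production surrogate would normally also report how uncertain each prediction is, since that uncertainty is what an active learning recommender uses to decide which test to run next.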

*Performance results range from 30% to 60%, depending on the efficiency of the existing test plan.